It's not you, it's Google: how to overcome the Google Places API limit of 120 places

Your extended go-to guide on how to use the Google Maps Scraper geolocation features to the fullest.

Need to scrape more than 120 places on Google Maps in a large city? What about hundreds of thousands or even millions of places across a whole country, or maybe even a continent? Is there a tool that can do that? It's all possible with the right Google Maps API. This guide to using Google Maps Scraper 🔗 will show you how to overcome the limits of scraping Google Maps in 4 different ways.

What are Google Maps limitations? ⛔

Before we start with the Google Maps API, we need to understand the website we're working with. Let's take New York City's restaurants as an example to showcase what Google Maps can do.

When browsing the Google Maps website as a user, you might notice a peculiar limitation. No matter how many places there are in a city, at some point, you will run out of places that are displayed. The helpful sidebar on the left will say "You've reached the end of the list."

The scrollbar on the left always stops at 120 places 🤔

If you count how many items are actually on this list, you will always end up with 120 at most. The same will happen if you search an even bigger area (a state, for example): 120 places displayed, max.

But there are definitely more than 120 restaurants in New York City or New York State. This display limitation of Google Maps is there for a reason (better UX), but it's not a limitation you want to run into when scraping that data. So how do we overcome it?

To overcome the Google Maps limit of 120 places, we created Google Maps Scraper 🔗. It's a tool that can double as both a scraper and an unofficial Google Maps API. It can find or scrape hundreds of thousands of places at a time. Let's explore how it works.

Creating map grids 🗺

There's no magic here. What Google Maps Scraper 🔗 will do is split the large map into many smaller maps. It will then search or scrape each small map separately and combine the end results.

How a scraper creates a map grid

Why would the scraper do this, you ask? Besides, each small map seems empty from this distance (no pins visible 👀), so would there be any results at all? To find an answer, we need to do some more detective work on the Google Maps website.

Automatic zooming on Google Maps 🔎

If you zoom out from the map far enough, you won't see many places on the map, only city and state names. The further you zoom out, the fewer places (represented by Google Maps pins 📍) you can see, and the lower the zoom level is.

Zoom 5 on Google Maps

Zoom level 1 on Google Maps shows the whole planet, while level 21 shows a tiny street. You can always check the zoom level in the URL. For instance, to display the whole East Coast, Google Maps uses zoom level 5 (as seen in the URL). The more you zoom in, the higher the level is, the more detailed the map is, and the more places it displays.
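Reading the zoom level and the map center out of a URL is easy to automate. Here's a minimal sketch, assuming the URL contains the `@<latitude>,<longitude>,<zoom>z` segment you see in the browser address bar. The `parse_viewport` helper and the sample URL are illustrative and not part of Google Maps Scraper:

```python
import re
from typing import NamedTuple, Optional


class Viewport(NamedTuple):
    latitude: float
    longitude: float
    zoom: float


# Matches the "@<lat>,<lng>,<zoom>z" segment of a Google Maps URL.
_VIEWPORT_RE = re.compile(
    r"@(-?\d+(?:\.\d+)?),(-?\d+(?:\.\d+)?),(\d+(?:\.\d+)?)z"
)


def parse_viewport(url: str) -> Optional[Viewport]:
    """Return the map center and zoom found in the URL, or None if absent."""
    match = _VIEWPORT_RE.search(url)
    if match is None:
        return None
    latitude, longitude, zoom = (float(group) for group in match.groups())
    return Viewport(latitude, longitude, zoom)


# Illustrative URL; copy a real one from your own browser.
example_url = (
    "https://www.google.com/maps/search/restaurants/"
    "@40.7127753,-74.0059728,16z"
)
print(parse_viewport(example_url))
```

If the URL has no such segment (for example, a bare `https://www.google.com/maps`), the helper returns `None` instead of guessing.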

For our New York City example, compare the number of pins at zoom level 16 (closer) vs. zoom level 15 (further). Can you see how many places are missing in the latter?

Same map, different zoom levels, and different number of results visible
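To put rough numbers on that difference: each zoom level doubles the map scale, so a browser window of the same size covers about four times the area at zoom 15 as it does at zoom 16, while the 120-place cap stays the same. The sketch below uses the standard Web Mercator ground-resolution formula and assumes 256-pixel tiles and a 1024×768 pixel viewport. Both are assumptions for illustration, not values taken from the scraper:

```python
import math

EARTH_CIRCUMFERENCE_M = 2 * math.pi * 6378137  # WGS 84 equatorial radius
TILE_SIZE_PX = 256  # assumed tile size for the Web Mercator pyramid


def meters_per_pixel(latitude_deg: float, zoom: int) -> float:
    """Approximate ground distance covered by one pixel at this zoom."""
    world_width_px = TILE_SIZE_PX * 2 ** zoom
    return (
        EARTH_CIRCUMFERENCE_M
        * math.cos(math.radians(latitude_deg))
        / world_width_px
    )


def viewport_area_km2(
    latitude_deg: float, zoom: int, width_px: int = 1024, height_px: int = 768
) -> float:
    """Rough area shown in a viewport (good enough for small, city-scale maps)."""
    scale = meters_per_pixel(latitude_deg, zoom)
    return (width_px * scale) * (height_px * scale) / 1e6


nyc_latitude = 40.71
for zoom in (15, 16):
    print(zoom, round(viewport_area_km2(nyc_latitude, zoom), 2), "km^2")
```

The same 120-result ceiling spread over a quarter of the area is why zooming in surfaces places that the wider view leaves out.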

This zoom difference would influence your API results as well because - you've guessed it - the 120 places limitation applies no matter the zoom level. So how does the scraper deal with this issue?

The scraper will split the NYC map into a number of smaller, more detailed mini-maps. For each mini-map, the zoom level will be adjusted automatically to the optimal, richest-in-results level (usually 16), so the mini-maps won't be empty. This way, the scraper will be able to find and scrape every single place on a small map. It's important to note that the scraper does allow you to override the standard zoom level and get even more results (at the cost of higher compute unit usage).

Finally, the scraper sums up and patches together the results of all mini-maps. That way we can get accurate map data from the whole city of New York, which makes this method the best for large-scale scraping.
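The splitting step can be pictured with a few lines of Python. This is only a sketch of the general idea, not the scraper's actual implementation: it cuts a latitude/longitude bounding box into equal rows and columns, and the New York City bounds are rough illustrative values.

```python
from typing import List, NamedTuple, Tuple


class Cell(NamedTuple):
    south: float
    west: float
    north: float
    east: float

    @property
    def center(self) -> Tuple[float, float]:
        """(latitude, longitude) of the middle of the cell."""
        return ((self.south + self.north) / 2, (self.west + self.east) / 2)


def split_into_grid(
    south: float, west: float, north: float, east: float, rows: int, cols: int
) -> List[Cell]:
    """Cut a bounding box into rows x cols mini-maps (no antimeridian handling)."""
    if rows < 1 or cols < 1:
        raise ValueError("rows and cols must be at least 1")
    lat_step = (north - south) / rows
    lng_step = (east - west) / cols
    cells = []
    for row in range(rows):
        for col in range(cols):
            cells.append(
                Cell(
                    south + row * lat_step,
                    west + col * lng_step,
                    south + (row + 1) * lat_step,
                    west + (col + 1) * lng_step,
                )
            )
    return cells


# Rough, illustrative bounding box around New York City.
nyc_cells = split_into_grid(40.49, -74.26, 40.92, -73.70, rows=4, cols=4)
print(len(nyc_cells), "mini-maps; first center:", nyc_cells[0].center)
```

Every cell is one more page to open, so a 4×4 grid means 16 page loads and a 40×40 grid means 1,600. That's the trade-off between thoroughness and cost.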

However, this method's advantage comes at a cost: while thorough, map grids can be quite slow and consume a lot of credits. There might be very few restaurants on a mini-map, far fewer than 120, so why does it take so long to scrape them? The reason is that every small map is a separate page the scraper has to open. Each one is an extra request for the scraper to make, hence extra work, hence extra consumed credits.

To recap, here's how the scraper can do its job and find all places on Google Maps:

You provide the scraper with a location: country, state, county, city, or postal code

The scraper splits this map into smaller maps

It also automatically adjusts the zoom level on each mini-map to reach the maximum density of pins

The scraper extracts the results from each mini-map

The scraper combines results from all mini-maps
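The last step, combining, is worth a quick illustration. The sketch below assumes that a place near a border can show up in two neighboring mini-maps and that each result carries a unique `placeId`. Both are assumptions for the example; this guide doesn't describe how the scraper merges results internally. The `search_cell` callback and the fake data stand in for real page loads.

```python
from typing import Callable, Dict, Iterable, List

Place = Dict[str, str]


def combine_results(
    cells: Iterable[str], search_cell: Callable[[str], List[Place]]
) -> List[Place]:
    """Search every mini-map and keep each place once, in first-seen order."""
    unique: Dict[str, Place] = {}
    for cell in cells:
        for place in search_cell(cell):
            # setdefault keeps the first copy and ignores later duplicates.
            unique.setdefault(place["placeId"], place)
    return list(unique.values())


# Stand-in data: "Deli Two" sits on the border and appears in both cells.
FAKE_RESULTS = {
    "cell-a": [
        {"placeId": "p1", "title": "Pizza One"},
        {"placeId": "p2", "title": "Deli Two"},
    ],
    "cell-b": [
        {"placeId": "p2", "title": "Deli Two"},
        {"placeId": "p3", "title": "Cafe Three"},
    ],
}

places = combine_results(FAKE_RESULTS, lambda cell: FAKE_RESULTS[cell])
print([place["title"] for place in places])
# prints ['Pizza One', 'Deli Two', 'Cafe Three']
```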

4 ways to use Google Maps Scraper

As it has become increasingly popular, Apify's Google Maps API has evolved to answer the demand for quality Google Maps scraping in many ways. We now have at least 4 different ways to scrape the same location: by using City-Country parameters, Postal address, URLs, and Custom geolocation. But that comes at a price: each of these methods has a bit of a learning curve.

🛂 Level 1: City-Country-Search term (simple)

So let's start with the obvious way: filling out City-Country-Search term parameters, aka the first few fields.

Indicate what you are searching for (restaurants) and where (New York, United States). Then hit Start ▷ and the scraper will do all the map grid work we've been discussing above.

Seems pretty straightforward; what can go wrong here? Sometimes when it comes to inserting the city name, a user will write both the city name and search category in Search terms. In this case, the search will be correct, but the map grid function will not apply and you will be limited to 120 results.

Therefore, don't use the Search term for city names. Instead, put the city name where it belongs - the City field. And leave the Search term for types of places: restaurant, cafe, vegan museum - whatever category of place you're searching for. Here are just a few examples of what you can put in the Search term:

... but also gas stations, bars, parks,

All in all, the Search term is what narrows your scrape to a specific category of places instead of every place on the map. So don't leave this field empty unless you need absolutely everything that's on the map.
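If you prepare the scraper input in code, a small guard can catch the city-in-the-search-term mistake before the run starts. This is a hedged sketch: the `build_level1_input` helper and the `searchTerms`, `city`, and `country` keys are placeholders for illustration, so check the scraper's readme for the real input field names.

```python
from typing import Dict, List


def build_level1_input(
    search_terms: List[str], city: str, country: str
) -> Dict[str, object]:
    """Build a City-Country-Search term input, keeping the city out of the terms.

    The key names below are hypothetical placeholders, not the scraper's schema.
    """
    city_lower = city.strip().lower()
    for term in search_terms:
        if city_lower and city_lower in term.lower():
            raise ValueError(
                f"Move {city!r} out of the search term {term!r} "
                "and into the city field"
            )
    return {
        "searchTerms": list(search_terms),
        "city": city,
        "country": country,
    }


print(build_level1_input(["restaurants"], "New York", "United States"))

try:
    build_level1_input(["restaurants in New York"], "New York", "United States")
except ValueError as error:
    print("Rejected:", error)
```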

📮 Level 2: Postal address (simple)

For this method, you will have to define the Country, State, US County (if applicable), City, and Postal code of the area that interests you. In our case, we're scraping all restaurants in Syracuse using the city's details. The more details you provide, the better the results. And don't forget to fill out the Search term as well!

For the most accurate search, fill out as many fields as you can in this section

This method is good for scraping areas by postal code or smaller cities and towns.

The scraper will once again create map grids and adjust the zoom level in the background; no need to set that up. Just hit the Start ▷ button.

🔗 Level 3: One field only - URL (medium difficulty)

For this method, we will need to forget the previous approaches and instead go to the Google Maps website first. Find the area and the type of place you want to scrape, and note the URLs. For instance, here's how you get URLs for all restaurants on Long Island: find Long Island, type in restaurants, and move around the island. Notice that each part of the island will have its own URL.

Find the URLs of the Google Maps area of your interest

Now copy each URL and paste it into the Use a Google Maps URL field. No other fields are needed for this method, and you can add as many or as few URLs as you want before hitting Start ▷.

Copy-paste each URL into the respective field

Essentially, in this case, you're doing the scraper's job by making your own map grid. You don't need the Search term in this case because you've already searched for the restaurants in Long Island on Google Maps, and your search became part of the URL (yeah, even with the typo 😉).

This option will give you better control over the map. However, you have to keep in mind that each URL will never get over the 120 results limit ⚠️
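If you already know the center points you want to cover, you can also assemble such URLs in code rather than panning around by hand. The sketch below mirrors the URL pattern visible in the browser address bar (`/maps/search/<query>/@<lat>,<lng>,<zoom>z`). That pattern is an observation, not a documented contract, and the center points are illustrative, so copying real URLs from your browser remains the safest route.

```python
from typing import List, Tuple
from urllib.parse import quote


def maps_search_url(
    query: str, latitude: float, longitude: float, zoom: int = 16
) -> str:
    """Build a Google Maps search URL centered on the given point."""
    return (
        "https://www.google.com/maps/search/"
        f"{quote(query)}/@{latitude:.6f},{longitude:.6f},{zoom}z"
    )


# Illustrative (latitude, longitude) centers, one per hand-made mini-map.
centers: List[Tuple[float, float]] = [
    (40.789, -73.135),
    (40.868, -72.887),
]

urls = [maps_search_url("restaurants", lat, lng) for lat, lng in centers]
for url in urls:
    print(url)
```

Remember that each URL is still capped at 120 results, so dense areas need more, tighter URLs.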

📡 Level 4: Custom geolocation in Google Maps (boss level)

If you don't want to rely on the scraper correctly creating the map area based on location parameters, you can customize it. Here are a few interesting ways you can adjust the scraper:

Circle

This is where a bit of geometry meets JavaScript. The circle feature is very useful for scraping places in specific, typically circular and dense areas such as a city center.

If you don't care about the outskirts, this is your best option.

Let's scrape an area in a circle shape

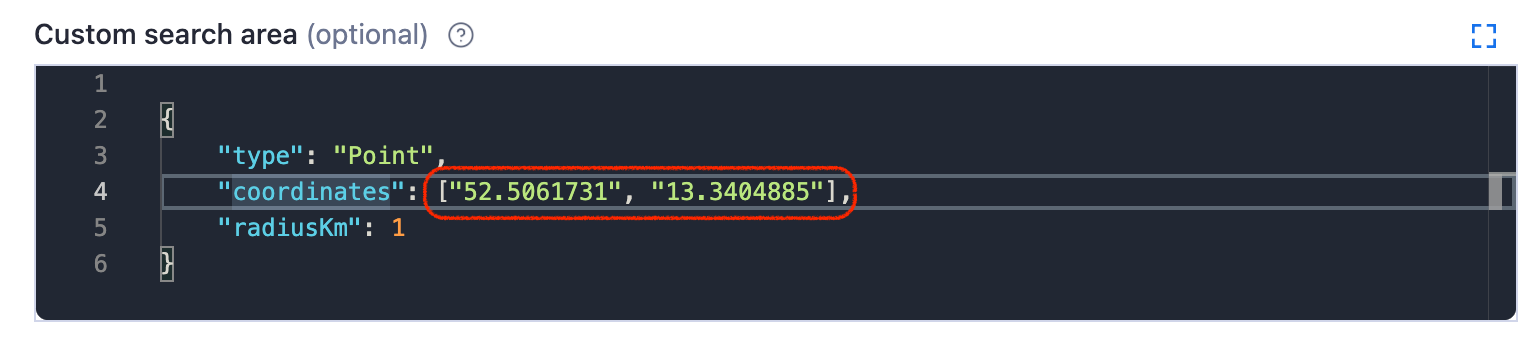

First, define the center of your circle on Google Maps (longitude and latitude):

One way to find the coordinates is directly in the URL

Circle parameters in the Custom search area field

... then add your circle coordinates into the Custom search area field. Don't forget to pick the radius in kilometers to set how far your circle should reach.
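Here's a hedged sketch of what a circle definition might look like as data, plus a quick way to check whether a given place falls inside it. It follows the GeoJSON convention of putting longitude first. The `radiusKm` key and the `Point` shape are assumptions for illustration, so use the readme as the authority on the exact format. The coordinates are approximate.

```python
import math
from typing import Dict


def make_circle(longitude: float, latitude: float, radius_km: float) -> Dict:
    """Circle as a GeoJSON-style point plus a radius (key names assumed)."""
    if radius_km <= 0:
        raise ValueError("radius_km must be positive")
    return {
        "type": "Point",
        "coordinates": [longitude, latitude],  # longitude comes first
        "radiusKm": radius_km,
    }


def haversine_km(lat1: float, lng1: float, lat2: float, lng2: float) -> float:
    """Great-circle distance between two points in kilometers."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lng2 - lng1)
    a = (
        math.sin(d_phi / 2) ** 2
        + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    )
    return 2 * 6371.0088 * math.asin(math.sqrt(a))


def in_circle(circle: Dict, latitude: float, longitude: float) -> bool:
    center_lng, center_lat = circle["coordinates"]
    distance = haversine_km(center_lat, center_lng, latitude, longitude)
    return distance <= circle["radiusKm"]


# Approximate coordinates: a 2 km circle around Times Square.
midtown = make_circle(longitude=-73.9855, latitude=40.7580, radius_km=2)
print(in_circle(midtown, 40.7484, -73.9857))  # near the Empire State Building
print(in_circle(midtown, 40.6892, -74.0445))  # near the Statue of Liberty
```

Mixing up latitude and longitude is the most common mistake here: Google Maps URLs show latitude first, while GeoJSON expects longitude first.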

Polygon

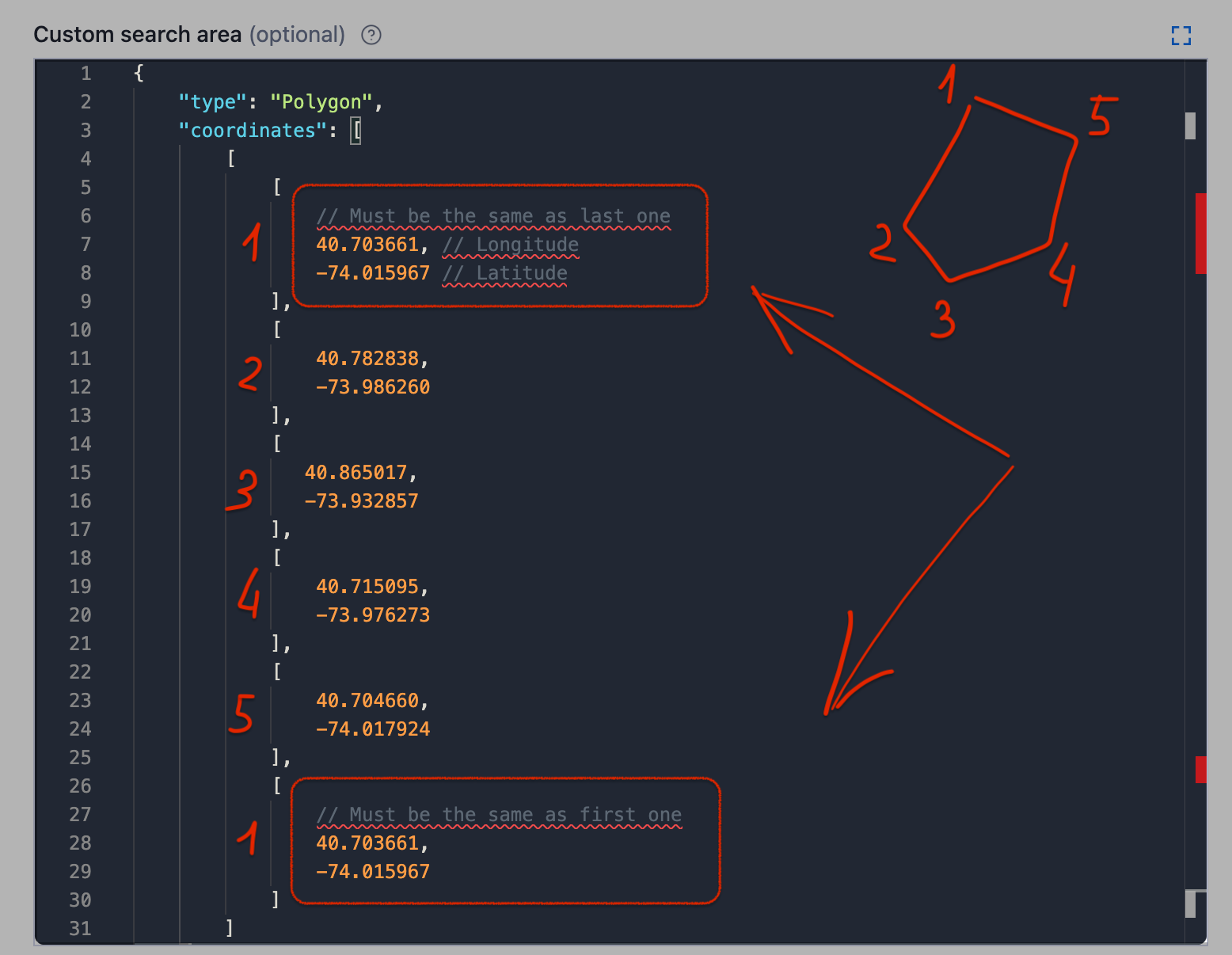

Do not be intimidated – a polygon uses the same principle for interacting with Google Maps coordinates as the circle. However, it involves more than one coordinate pair.

A polygon is perfect for custom or unusual shapes (a district, a peninsula, or an island, for example). It can have as many points as you need (triangle, square, pentagon, hexagon, etc.).

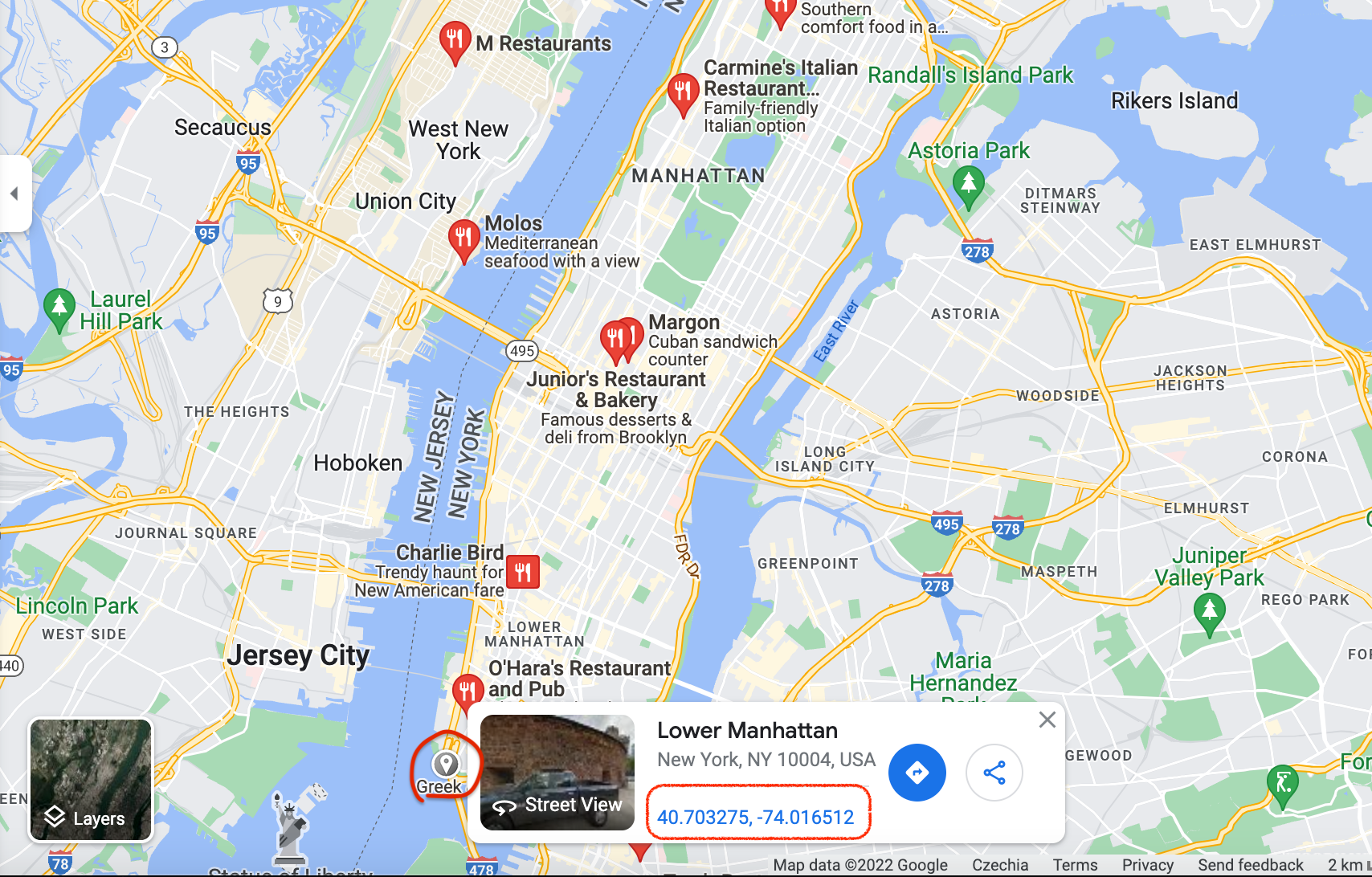

To form a free polygon, just pick a few different points on the map and take note of their coordinates. You can find any pair of coordinates by clicking anywhere on the map:

Click anywhere on the map to reveal the coordinates

You can add as many points as you want to define your area in the most accurate shape possible. We're gonna go with the pentagon shape. Now copy-paste each coordinate pair in sequence into the Custom search area. This is what creating a pentagon for part of Manhattan would look like:

Don't forget to close the polygon by making sure the first and the last coordinates are the same

And click Start ▷! Of course, we could add more points to this shape to make it more accurate for the island, but you get the gist. Use the readme 🔗 as your formatting guide. It's also important to note that the Google Maps polygon is no less powerful than the previous options; you can count on it if your priority is speed, consuming fewer platform credits, or scraping a specific area with unusual geometry.
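Forgetting to close the ring is easy to do by hand, so here's a small sketch that builds a GeoJSON `Polygon` and repeats the first point at the end if needed. The pentagon coordinates are rough illustrative values for part of Manhattan (longitude first), not the ones from the screenshot, and `make_polygon` is a hypothetical helper:

```python
from typing import Dict, List, Sequence, Tuple

LngLat = Tuple[float, float]


def make_polygon(points: Sequence[LngLat]) -> Dict:
    """Build a GeoJSON Polygon with one outer ring, closing it if necessary."""
    ring: List[List[float]] = [list(point) for point in points]
    if len(ring) < 3:
        raise ValueError("a polygon needs at least 3 distinct points")
    if ring[0] != ring[-1]:
        ring.append(list(ring[0]))  # first and last point must match
    if len(ring) < 4:
        raise ValueError("a closed ring needs at least 4 positions")
    return {"type": "Polygon", "coordinates": [ring]}


# Rough, illustrative pentagon over part of Manhattan: (longitude, latitude).
pentagon = make_polygon(
    [
        (-74.0180, 40.7050),
        (-73.9710, 40.7110),
        (-73.9420, 40.7760),
        (-73.9600, 40.8010),
        (-74.0100, 40.7560),
    ]
)
print(len(pentagon["coordinates"][0]), "positions in the ring")
```

Five corners produce six positions, because the closing point repeats the first one.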

Multipolygon

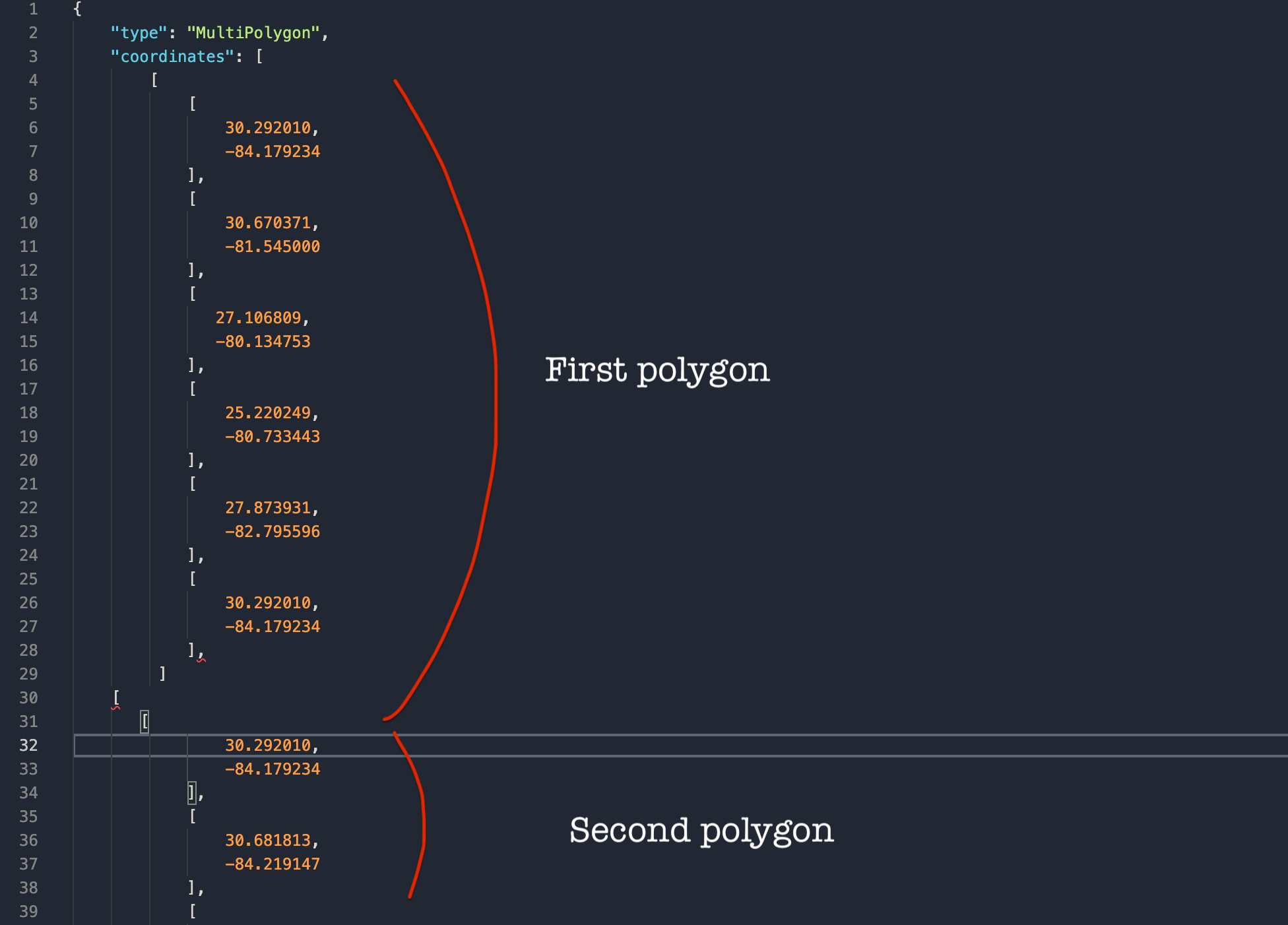

Multipolygon is the most complicated and most flexible custom geolocation option.

It is best used for areas that are difficult to fit into one polygon or areas that are quite far away geographically but still have to be scraped together (for instance, an island together with the mainland).

This multipolygon, for example, combines two polygons, since that seems to be the only way to encompass all of Florida.

Two pentagons to scrape all places in Florida

This is how you insert the coordinates of a multipolygon (by combining two or more polygons). Use the readme 🔗 as your formatting guide.

Creating a multipolygon for complex shapes
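In GeoJSON terms, a `MultiPolygon` is simply a list of polygons, each with its own closed ring. The sketch below assembles one from two point lists. The Florida coordinates are very rough illustrative values (longitude first), not the ones from the screenshot, and the helper names are hypothetical:

```python
from typing import Dict, List, Sequence, Tuple

LngLat = Tuple[float, float]


def close_ring(points: Sequence[LngLat]) -> List[List[float]]:
    """Return the points as a ring whose first and last positions match."""
    ring = [list(point) for point in points]
    if len(ring) < 3:
        raise ValueError("each polygon needs at least 3 points")
    if ring[0] != ring[-1]:
        ring.append(list(ring[0]))
    return ring


def make_multipolygon(shapes: Sequence[Sequence[LngLat]]) -> Dict:
    """Combine several point lists into one GeoJSON MultiPolygon."""
    return {
        "type": "MultiPolygon",
        # One entry per polygon; each polygon holds a single outer ring.
        "coordinates": [[close_ring(shape)] for shape in shapes],
    }


# Very rough, illustrative outlines: the panhandle and the peninsula.
panhandle = [(-87.6, 30.2), (-87.6, 31.0), (-82.0, 30.8), (-82.0, 29.5), (-85.0, 29.5)]
peninsula = [(-83.2, 29.5), (-81.3, 30.8), (-80.0, 26.8), (-80.3, 25.1), (-81.9, 25.9)]

florida = make_multipolygon([panhandle, peninsula])
print(len(florida["coordinates"]), "polygons")
```

Note the extra level of nesting compared with a single polygon: that additional pair of brackets is where hand-written multipolygons most often go wrong.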

Future improvements 🛠

While Google Maps Scraper might be quite complex, we are constantly working on improving it so it can deliver even better quality results. Currently, there seems to be one core issue when trying to scrape large areas: empty or less populated territories. Be it a natural park, a body of water, or even a desert with obviously no restaurants, hospitals, or other specific public places, the scraper will try to find them there anyway, which wastes its resources. The same goes for the outskirts of cities or towns, where there is a higher chance of large parks, forests, or industrial areas with no pins.

In the future, we want the scraper to be able to skip those territories and instead focus on the denser parts of the map. These are three directions we're working in:

Scrape only pins and skip deserted or natural areas once the scraper reaches them.

Focus on cities and densely populated areas and not the outskirts.

Enable more locations at once to run as multiple scrapers in parallel that automatically scale and combine all results.

Give it a try, and let us know how the scraper worked for you. Meanwhile, here's one example of how you can apply data extracted from Google Maps (recommended for data scientists working with web data):