How to scrape data from any website to Excel

4 ways you can import any website into Excel and 3 simple steps for using the best method.

Hi, we're Apify. The Apify platform gives you access to 1,500+ tools to extract data from popular websites. Check us out.

Attracting over 750 million users worldwide, Microsoft Excel is one of the most popular applications for organizing, formatting and calculating data. Excel files are a great example of structured data, allowing users to easily manipulate datasets and gain insight into gathered data thanks to tables, graphs, and other visualizations.

But if you use Excel a lot, sooner or later you’ll probably come across online data that you’d like to pull into your spreadsheet. In this article, we’ll show you four ways you can import any website into a structured Excel file.

Manual copy and pasting

Implementing web queries

Excel’s VBA language

Using web scraping tools

There are multiple ways of turning a website into an Excel table, some more complicated than others

Manual copy and pasting

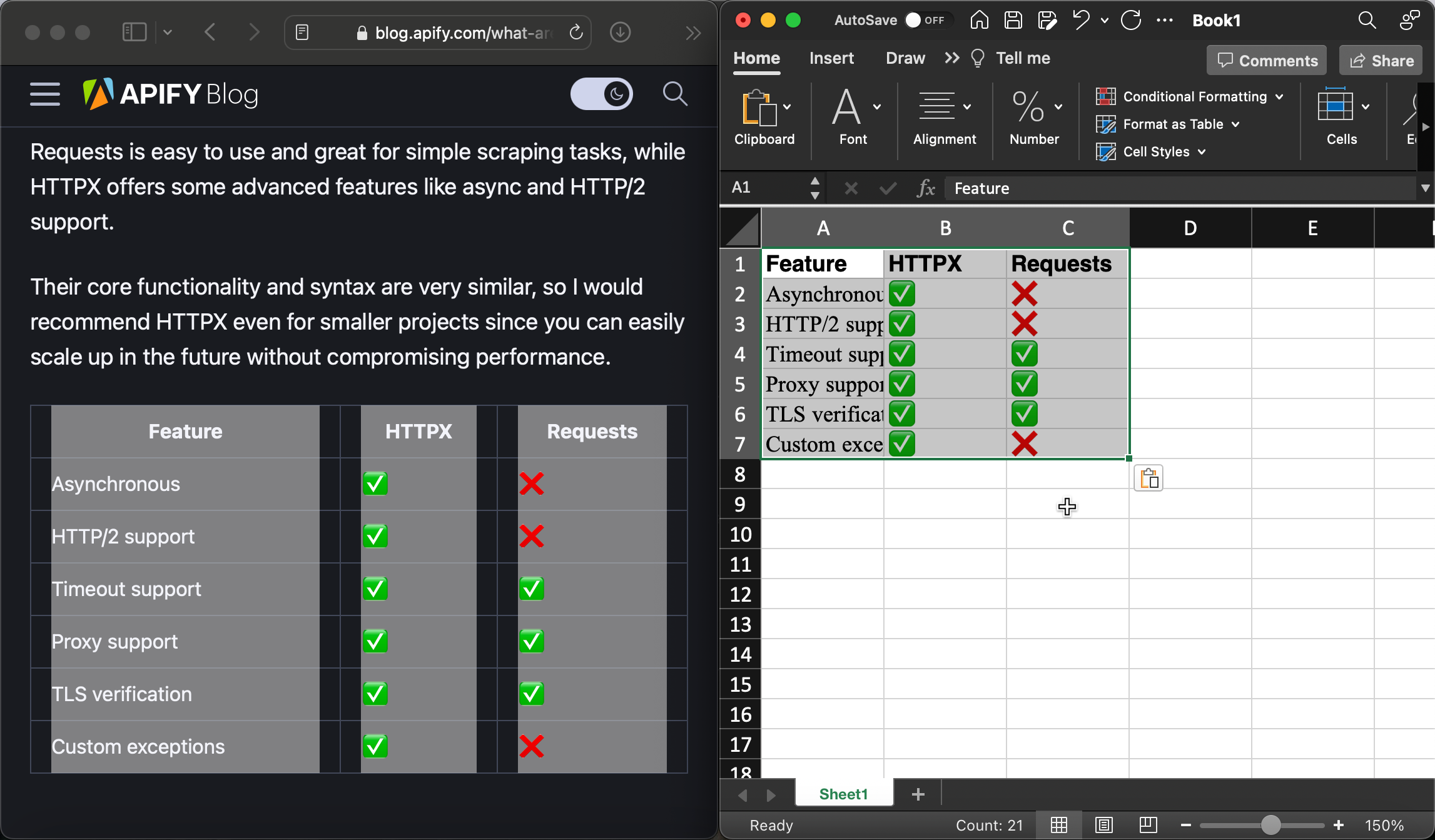

Depending on the amount and format of the data you want to pull into Excel, manual copying and pasting is always an option, although often a lengthy and tedious one. It is still used in areas such as manual product mapping, which require further interaction and input from the user. It can also be useful when the data you want to extract is already in a table format: for instance, here we simply copy and paste the HTML table from our Python libraries blog article. But keep in mind that the vast majority of online data is unstructured, so this method will be impractical for most use cases.

Copying and pasting is only effective when the data is already structured

Implementing web queries

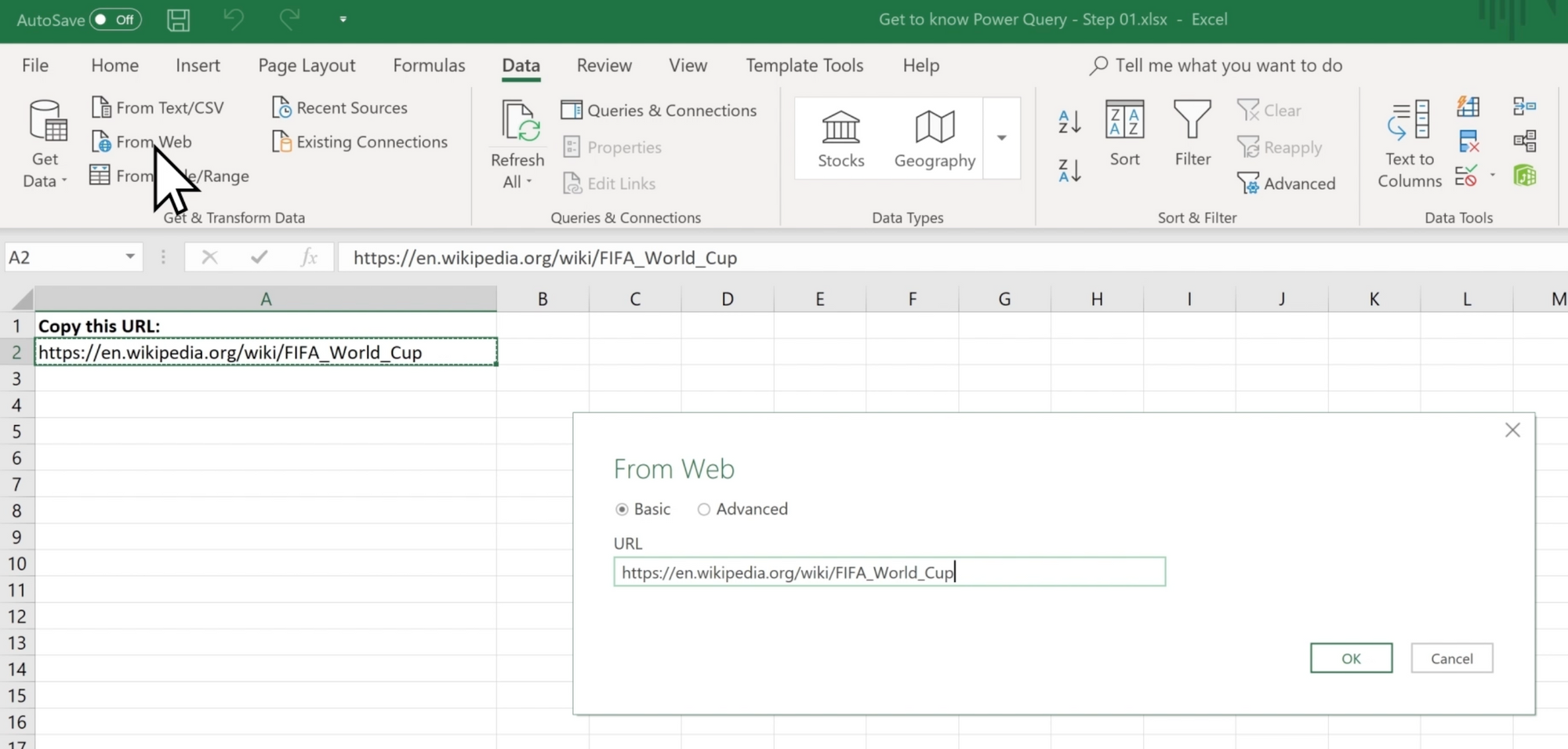

If you’re using Windows, you can use Microsoft’s Power Query feature set to pull structured data straight into your Excel sheet automatically. Simply go to the Data tab, select From Web, and input the URL of the website you’d like to export data from. Excel will then process the page and list any usable tables it finds. Once you have the data, you can refresh the query at any time, updating your sheet at the click of a button. While this method is useful for keeping your dataset up to date, it also only works with data that’s already structured. Another disadvantage is that Power Query’s ability to get web data is limited to the Windows operating system, so Mac and other OS users are out of luck here.

source: Microsoft Support
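To make the idea of "finding usable tables" more concrete, here is a minimal Python sketch of the same principle: walk through a page's HTML, collect the cells of each `<table>`, and write the first table out as CSV, which Excel opens directly. This is not how Power Query is implemented. It uses only Python's standard library, the sample HTML is made up for illustration, and it assumes well-formed, non-nested tables.

```python
import csv
import io
from html.parser import HTMLParser


class TableExtractor(HTMLParser):
    """Collects the cell text of every <table> on a page, row by row."""

    def __init__(self):
        super().__init__()
        self.tables = []   # each table is a list of rows
        self._row = None   # cells of the row being read, or None
        self._cell = None  # text fragments of the cell being read, or None

    def handle_starttag(self, tag, attrs):
        if tag == "table":
            self.tables.append([])
        elif tag == "tr" and self.tables:
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            # Collapse internal whitespace so each cell is one clean string.
            self._row.append(" ".join("".join(self._cell).split()))
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.tables[-1].append(self._row)
            self._row = None


def first_table_to_csv(html):
    """Returns the first table found in `html` as CSV text."""
    parser = TableExtractor()
    parser.feed(html)
    parser.close()
    if not parser.tables:
        raise ValueError("no <table> found in the page")
    out = io.StringIO()
    csv.writer(out, lineterminator="\n").writerows(parser.tables[0])
    return out.getvalue()


# Made-up sample page, standing in for a downloaded website.
SAMPLE_HTML = """
<table>
  <tr><th>Library</th><th>Use</th></tr>
  <tr><td>Requests</td><td>HTTP client</td></tr>
  <tr><td>Playwright</td><td>Browser automation</td></tr>
</table>
"""

if __name__ == "__main__":
    print(first_table_to_csv(SAMPLE_HTML))
```

Note that the sketch raises an error as soon as the page contains no table at all, which is exactly the limitation described above: this approach only helps when the data is already structured.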

Excel’s VBA language

Microsoft’s VBA (Visual Basic for Applications) is an implementation of the event-driven programming language Visual Basic built into the Office ecosystem. It allows users to go beyond the functions available in Microsoft Office out of the box, letting them automate processes, create macros and custom forms, or customize applications to fit their specific business needs. But implementing data extraction into your Excel sheet with VBA requires advanced programming and web scraping knowledge. You will also need to set up libraries such as Microsoft XML HTTP or Selenium, depending on whether the webpage you want to extract data from runs scripts: Microsoft XML HTTP fetches the raw HTML, while Selenium drives a real browser and can therefore handle pages that rely on scripts. Since using this method is fundamentally the same as web scraping, it might be better to use data extraction tools outside of Microsoft’s ecosystem for a more flexible workflow with more in-depth documentation.

Using web scraping tools

Web scraping lets you automate the process of data extraction, minimizing the effort put into gathering web data in bulk. Unlike the first two methods, web scraping also works on unstructured data, be it product names, prices, headings, descriptions, and so on. This data is then stored in a structured format, and depending on the tool you’re using to scrape the web, it can also be exported into machine-readable formats such as XLSX (Microsoft Excel).
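As a rough illustration of what "unstructured in, structured out" means, the sketch below pulls product names and prices out of loose HTML elements and turns them into rows with fixed columns. It is a simplified example built on Python's standard library only: the sample HTML and the `product`, `name`, and `price` class names are invented for this example, and a real page would need its own selectors (and usually a sturdier parser).

```python
import csv
import io
from html.parser import HTMLParser

# Hypothetical CSS class names that mark the fields we want; these double as
# the output column names. A real website will use different ones.
FIELDS = ("name", "price")


class ProductParser(HTMLParser):
    """Turns elements marked with FIELDS classes into one dict per product."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._field = None  # column currently being filled, if any

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if "product" in classes:
            # A new product container starts a new, empty row.
            self.rows.append({field: "" for field in FIELDS})
        for css_class in classes:
            if css_class in FIELDS and self.rows:
                self._field = css_class

    def handle_data(self, data):
        if self._field is not None:
            self.rows[-1][self._field] += data.strip()

    def handle_endtag(self, tag):
        # Simplification: any closing tag ends the current field.
        self._field = None


def scrape_products(html):
    """Returns a list of {"name": ..., "price": ...} dicts found in `html`."""
    parser = ProductParser()
    parser.feed(html)
    parser.close()
    return parser.rows


def rows_to_csv(rows):
    """Serializes the rows as CSV text, a format Excel can open."""
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=FIELDS, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return out.getvalue()


# Made-up sample markup, standing in for a downloaded product page.
SAMPLE_HTML = """
<div class="product"><h2 class="name">Mug</h2><span class="price">$9.50</span></div>
<div class="product"><h2 class="name">Teapot</h2><span class="price">$24.00</span></div>
"""

products = scrape_products(SAMPLE_HTML)
csv_text = rows_to_csv(products)
```

The point of the design is the split into two steps: one function that knows about the page's markup, and one that only knows about rows and columns. Swapping the CSV writer for an Excel writer would not touch the scraping part.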

You can build your own web scraper with Node.js or Python libraries such as Requests, HTTPX, Selenium, or Playwright, or use pre-made tools where you simply input your desired URL or search query. Apify is a full-stack platform that lets you do both - you can build (or import) your own scraper with our web scraping templates or use a ready-made Actor from Apify Store to get the job done.

So, let’s take a look at how you can get data from any website in 3 simple steps using APIs found on Apify Store:

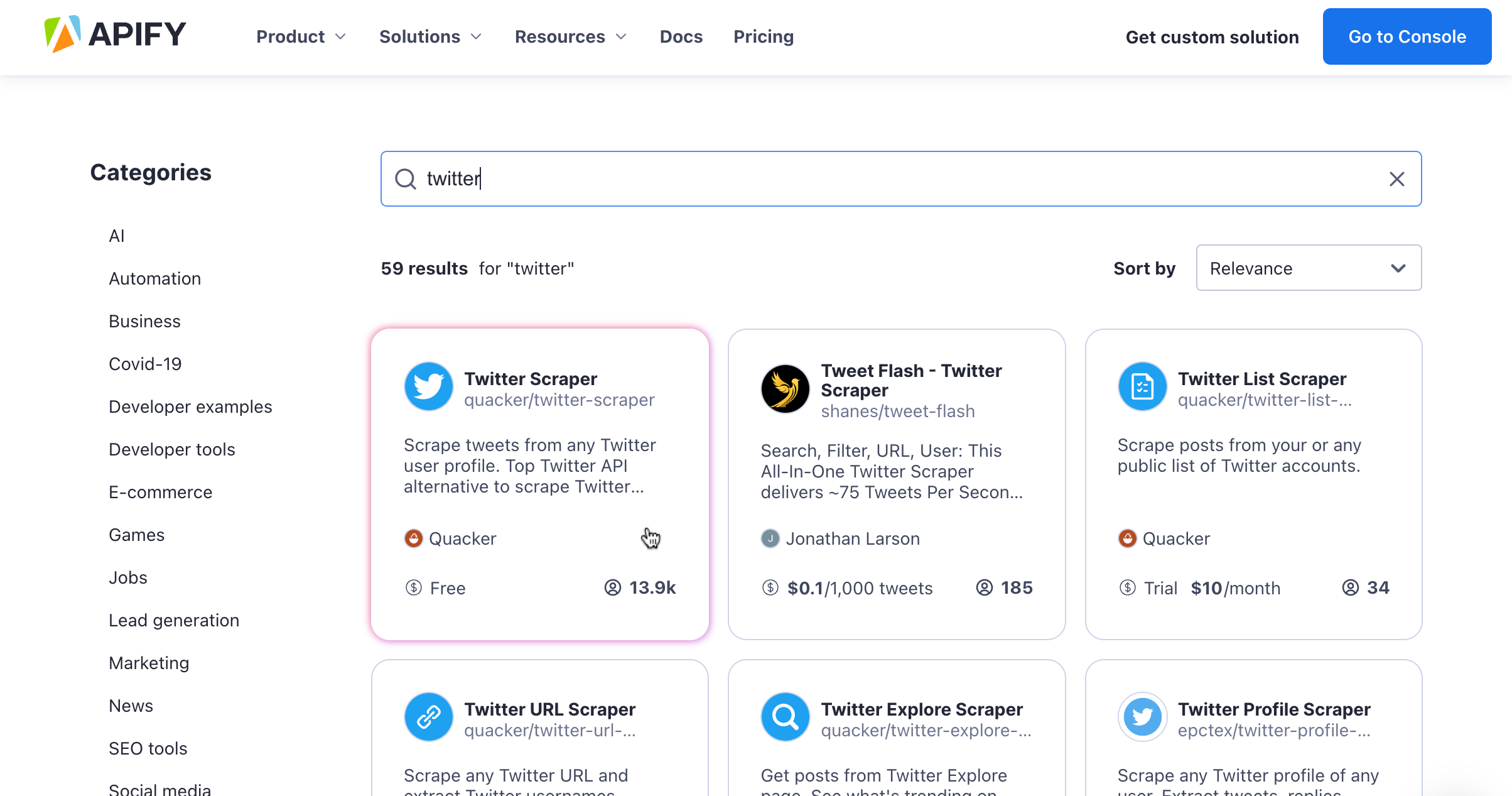

Step 1. Find the right tool

Apify Store works just like any other app store: just search for an Actor you’d like to use based on the website you want to scrape. Most scrapers are free, although you may come across some paid ones - no need to worry; even these offer a free trial without the need for a credit card. After you find your scraper, click the Try for free button. For this example, we’re going to be using Twitter Scraper.

Find a suitable Actor on Apify Store

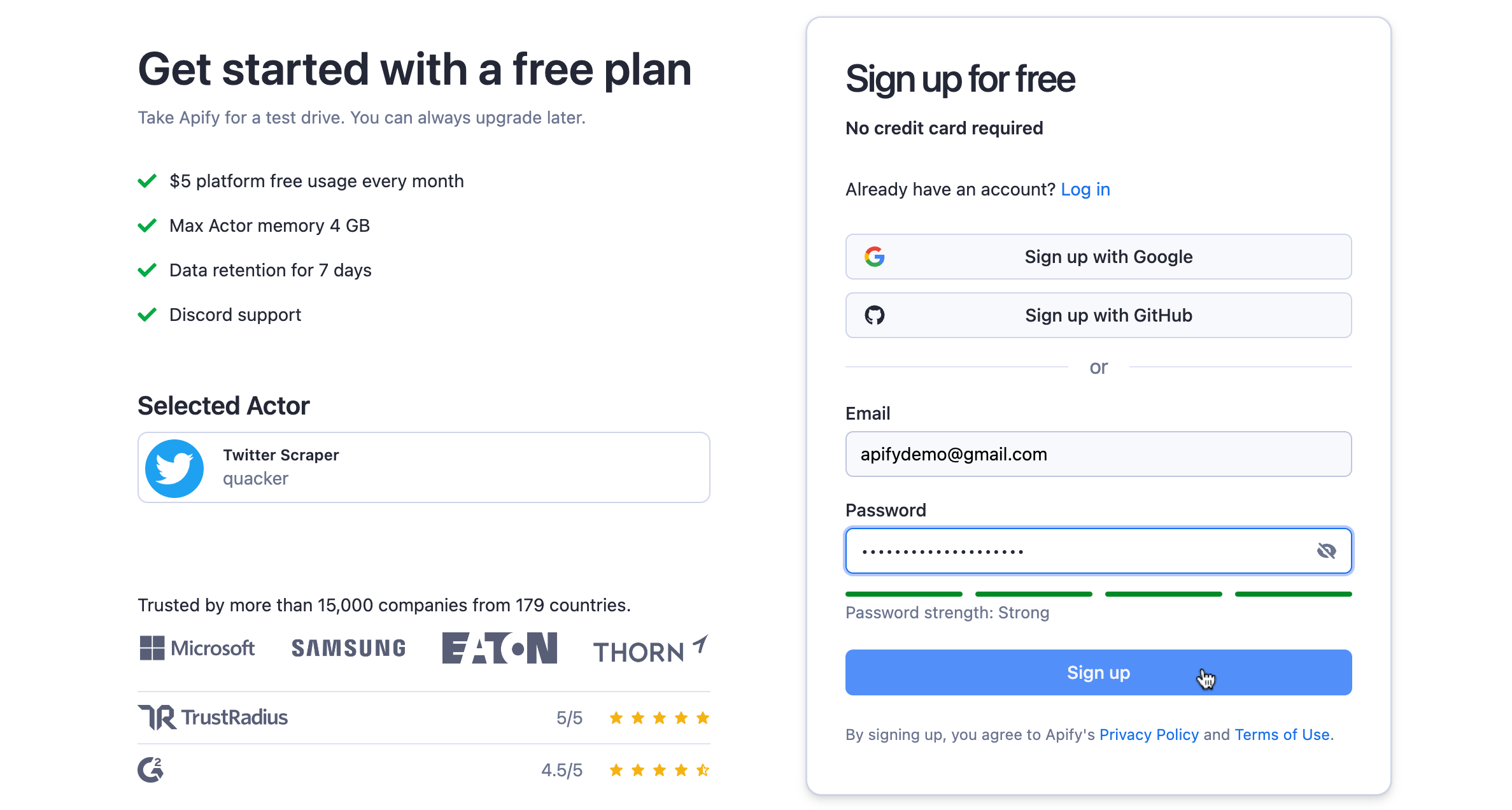

You’ll then be prompted to sign in or make an account if you don’t already have one. You can speed up the process by signing in through your Google or GitHub profile.

Sign in or make an account to access Apify Console

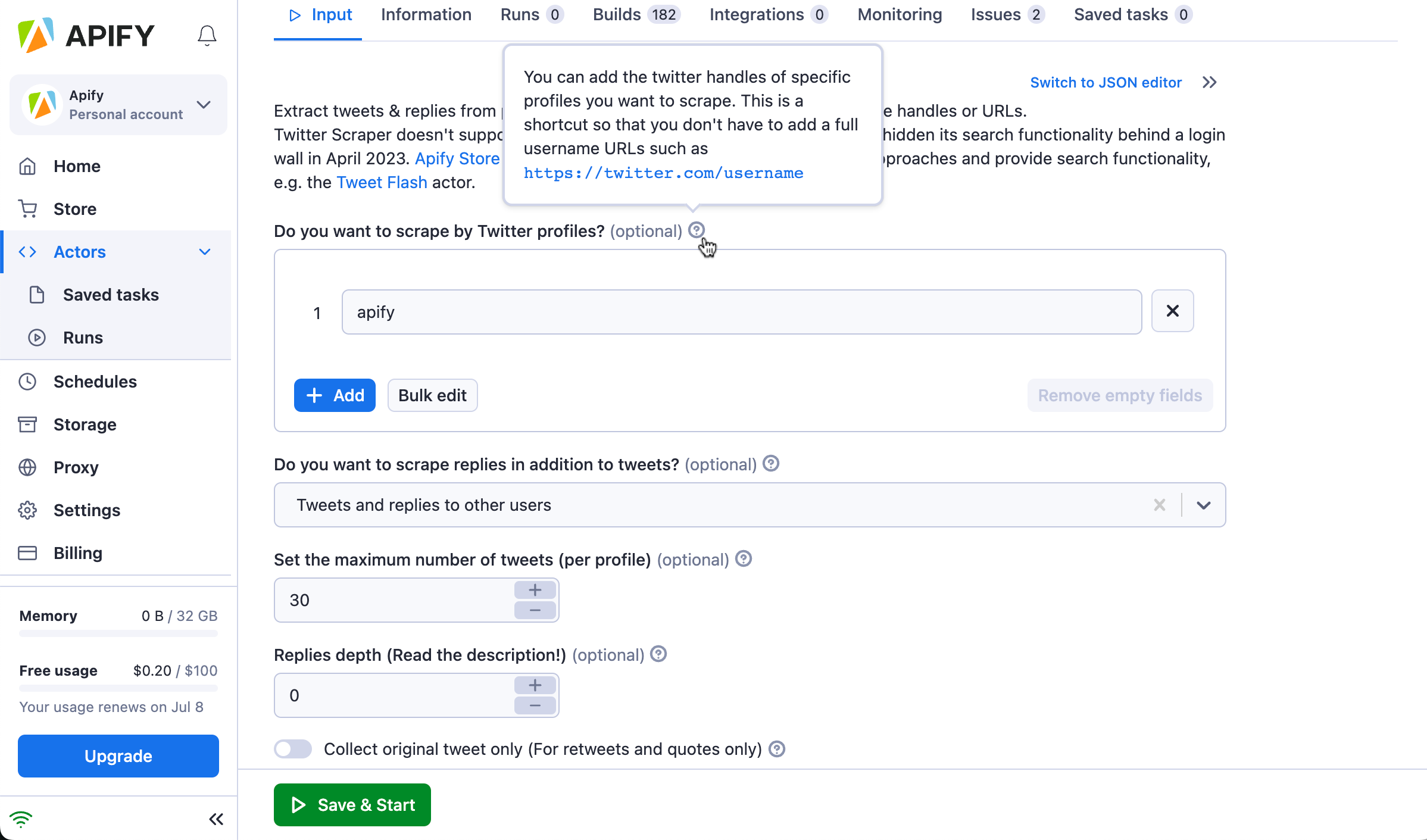

Step 2. Choose the data you want to scrape

Now, fill in the input schema to tell your Actor what data you want from the website of your choice. With Twitter Scraper, we decided to scrape the top 30 results from the handle @apify (yup, we’re also on Twitter, so don’t forget to give us a follow). Data like this is especially useful if you know how to use mail merge and want to tailor outreach or PR messages, for instance.

If you’re not sure what the individual inputs mean, hover your mouse over the question mark next to them to get an explanation. The Actor readme (you can find this on the Actor’s page in Apify Store) can also be of great help if you feel lost when filling out the schema.

Fill out the Actor's input schema

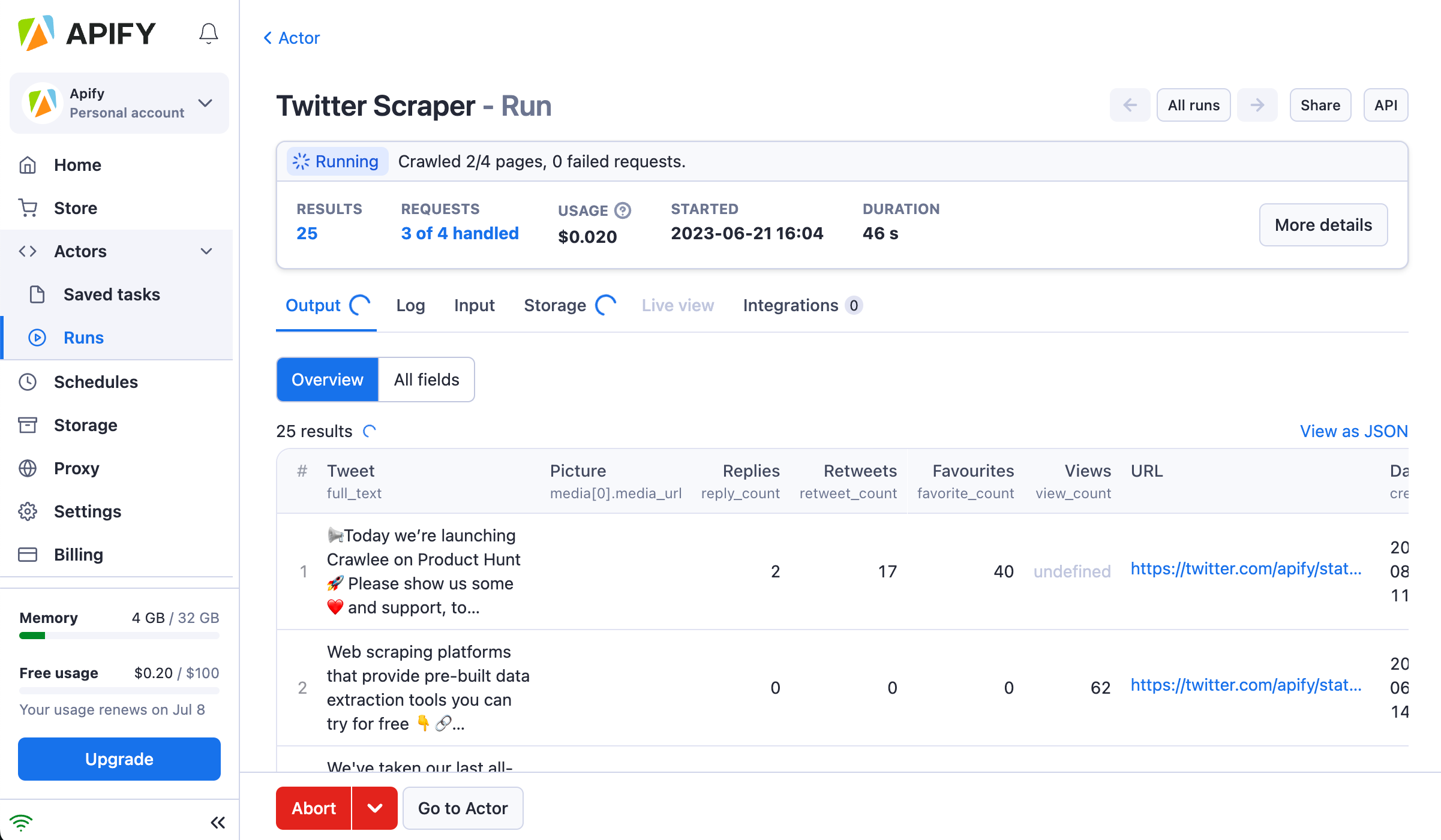

Step 3. Run the scraper and download your results in Excel

After you’re done filling out the input, hit Save & Start to kick off the Actor run. Now just wait for the scrape to finish.

You can watch your results load in the Output tab

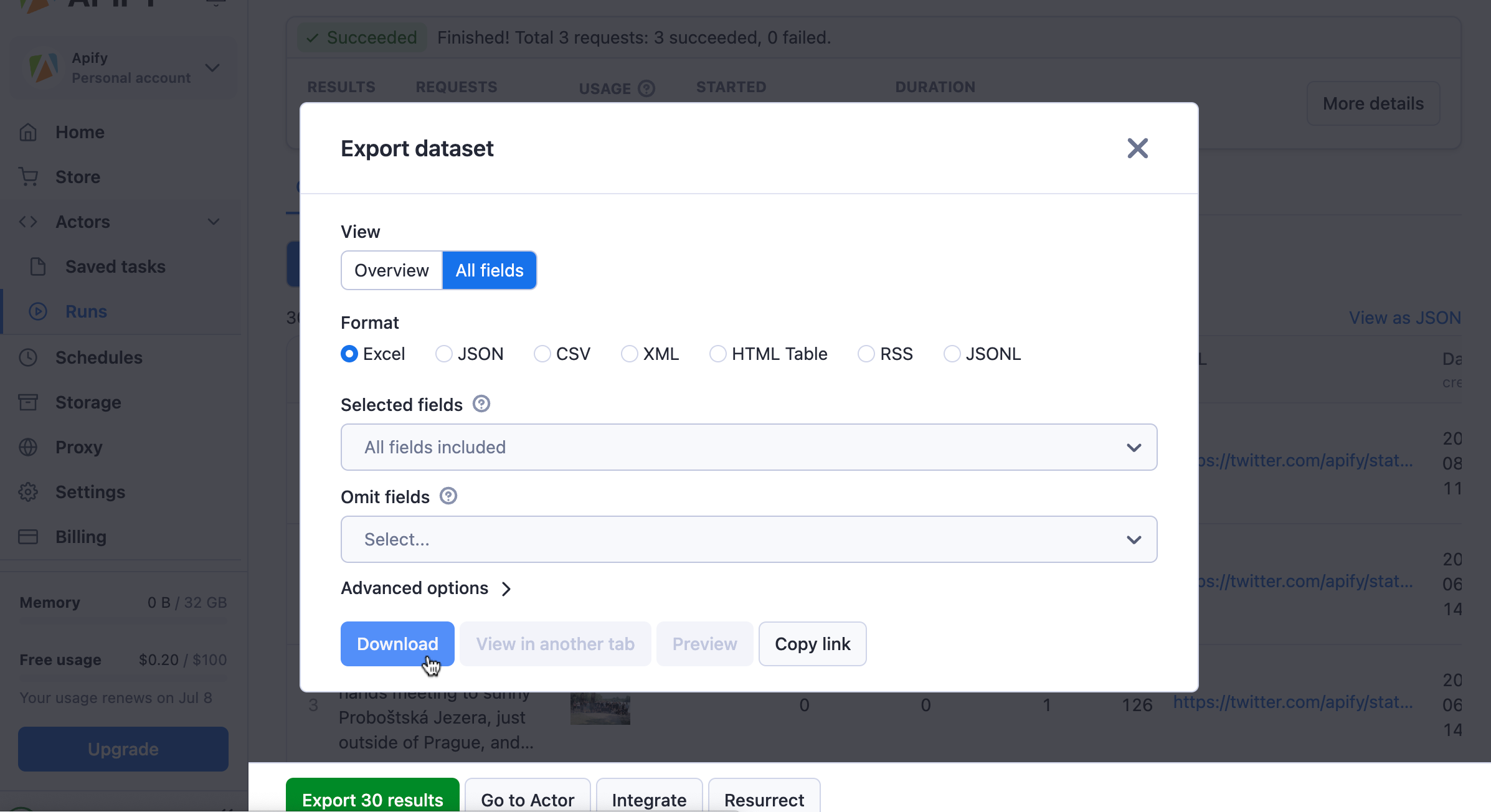
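If you'd rather not click through Apify Console every time, a run can also be started over the Apify API by sending a POST request with the Actor's input as JSON. The sketch below only builds that request, without sending it, so you can see what goes where. The Actor ID, the token, and the input field names (`handles`, `maxItems`) are placeholders made up for this example; the real field names are defined by each Actor's input schema and described in its readme.

```python
import json
import urllib.request

API_BASE = "https://api.apify.com/v2"


def build_run_request(actor_id, token, actor_input):
    """Builds (but does not send) the POST request that starts an Actor run."""
    return urllib.request.Request(
        url=f"{API_BASE}/acts/{actor_id}/runs",
        data=json.dumps(actor_input).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder values: swap in a real Actor ID, your own API token, and the
# input fields that the Actor you picked actually expects.
request = build_run_request(
    actor_id="example-user~example-scraper",
    token="YOUR_APIFY_TOKEN",
    actor_input={"handles": ["apify"], "maxItems": 30},
)

# Sending it would look like this (left commented out on purpose):
# with urllib.request.urlopen(request) as response:
#     run = json.load(response)["data"]
```

The JSON body plays the same role as the input form in Step 2: it tells the Actor what to scrape and how much.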

As soon as your run is finished, you can hit the Export results button at the bottom of the UI. Here you can choose your desired output format (in our case Excel) and which fields from the table you want (or don’t want) to export.

Download your data in an Excel format

❗ Tip: Not every scraper has the Output / Overview tab. If that’s the case, go to Storage to get the full data in Excel.
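The results you see in Storage live in a dataset, and the same Excel export is available over the Apify API by asking for the dataset's items with `format=xlsx`. Here is a small sketch that builds such a download URL, including the optional `fields` and `omit` parameters for choosing which columns to keep or drop. The dataset ID and field names below are placeholders, and a private dataset would also need your API token.

```python
from urllib.parse import urlencode

API_BASE = "https://api.apify.com/v2"


def dataset_export_url(dataset_id, fmt="xlsx", fields=None, omit=None):
    """Builds the URL for downloading a dataset's items in the given format."""
    params = {"format": fmt}
    if fields:
        params["fields"] = ",".join(fields)  # columns to keep
    if omit:
        params["omit"] = ",".join(omit)      # columns to drop
    return f"{API_BASE}/datasets/{dataset_id}/items?{urlencode(params)}"


# Placeholder dataset ID and field names, for illustration only.
export_url = dataset_export_url("YOUR_DATASET_ID", fields=["text", "url"])

# Downloading the file could then be done with, for example:
# urllib.request.urlretrieve(export_url, "results.xlsx")
```

This mirrors the export dialog in the UI: one choice for the format and one for the fields you want (or don't want) in the file.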

And that’s it! Now that you have your data, it’s time to explore the world of web scraping with a bigger pool of Actors: Interested in social media? Try our Instagram, TikTok, or Reddit scrapers. If you’d prefer to scrape product listings and their sellers, give the Amazon, eBay, or AliExpress scrapers a go. Looking for new hires? Scrape job listings from Indeed or Glassdoor. You can even further integrate these tools with other platforms, to bring your web scraping workflow to the next level 🚀

Missing an Actor in Apify Store? You can contribute by monetizing your own code or publishing an idea as an inspiration for our developer community. And if you need any advice from web scraping and automation enthusiasts, there’s always our Discord channel.